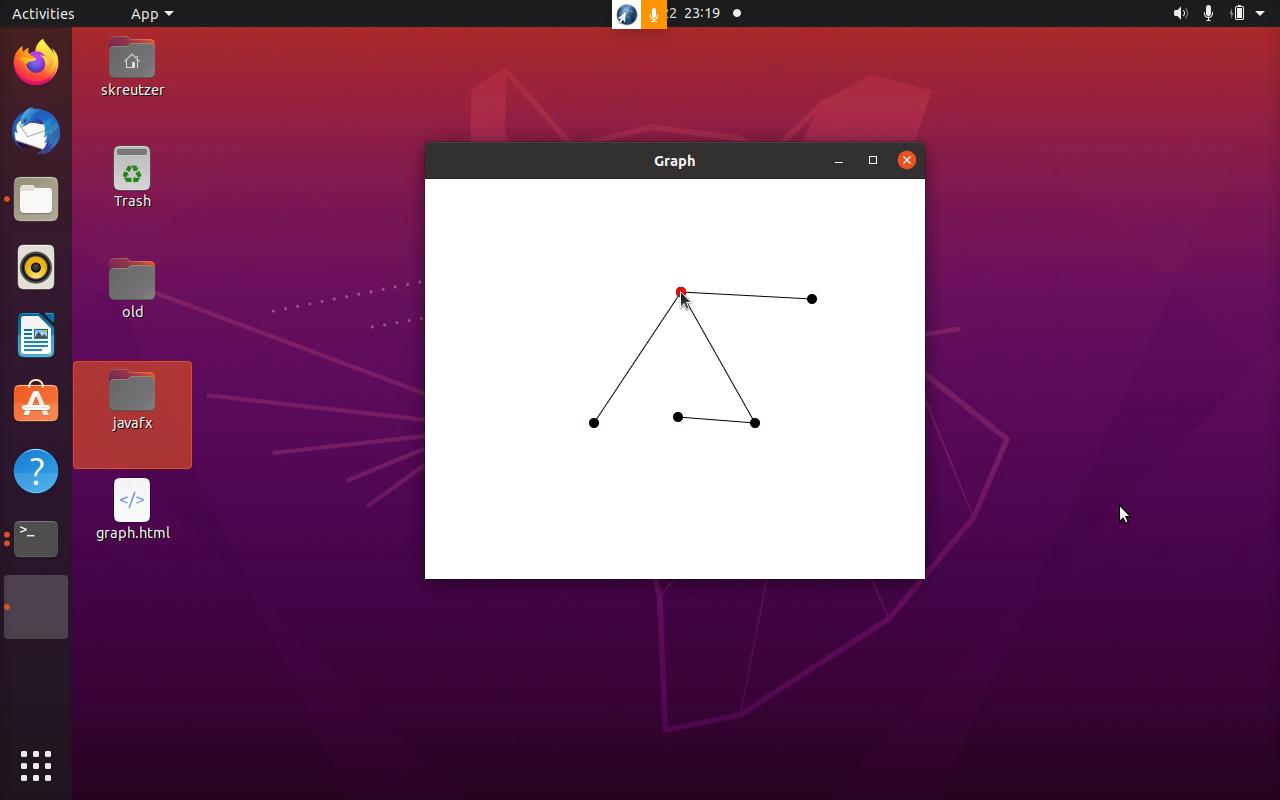

Tokenization & Lexical Analysis

Loading (deserializing) structured input data into computer memory as an implicit chain of tokens, in preparation for subsequent processing such as syntactic/semantic analysis, conversion, parsing, translation, or execution.
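The Python snippet below is a minimal sketch of that idea. It assumes a tiny hypothetical expression language. The token categories `NUMBER`, `IDENT` and `OP`, and the function name `tokenize`, are illustrative and do not come from the video. A regex-driven lexer reads the raw characters and yields one token at a time, so the "chain" of tokens is produced lazily rather than stored in full. A parser or other later stage could then consume it.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str  # token category, e.g. "NUMBER"
    text: str  # exact characters consumed from the input
    pos: int   # offset of the token's first character in the input


# Hypothetical token categories for a tiny expression language (illustrative only).
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
]
# One combined pattern; the name of the alternative that matched identifies the token kind.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> Iterator[Token]:
    """Yield tokens one at a time, so the token chain is produced on demand."""
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        kind = match.lastgroup
        if kind != "SKIP":  # whitespace separates tokens but is not itself a token
            yield Token(kind, match.group(), pos)
        pos = match.end()
```

For example, `list(tokenize("x = 3.5 * (y + 2)"))` yields `IDENT x`, `OP =`, `NUMBER 3.5`, `OP *`, `OP (`, `IDENT y`, `OP +`, `NUMBER 2`, `OP )`, each carrying its source offset. Any character that no rule matches raises `SyntaxError`. Recording the offset lets later stages report errors against the original input.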

More From: skreutzer

0 ratings

46 views